Taking your Integration Tests to the next level with Testcontainers

With a simple API and seamless integration with popular testing frameworks like Testify, Testcontainers makes it easy to orchestrate complex dependencies, so you can focus on writing great, idiomatic tests. Get ready to say goodbye to flaky integration tests and hello to faster feedback, regardless of the environment.

Why do developers test their applications? One reason is that developers must safeguard their applications from their own changes.

"Professional developers test their code." Martin, The Clean Coder (2011).

"Testing shows the presence, not the absence, of bugs." Dijkstra.

The quotes highlight the importance and essence of testing, acknowledging that tests can reveal issues but not guarantee that your program is correct.

Tests are an invaluable investment: they let developers confidently assess and improve their code, which increases productivity. Remember that, like application code, tests should be understandable, easy to maintain, and flexible enough to evolve.

Testing Strategies

Patience, young grasshopper. Before diving into the Testcontainers project, let's look at the main testing strategies and how integration tests fit into them.

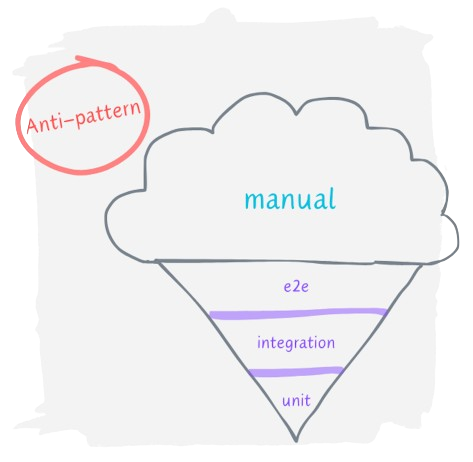

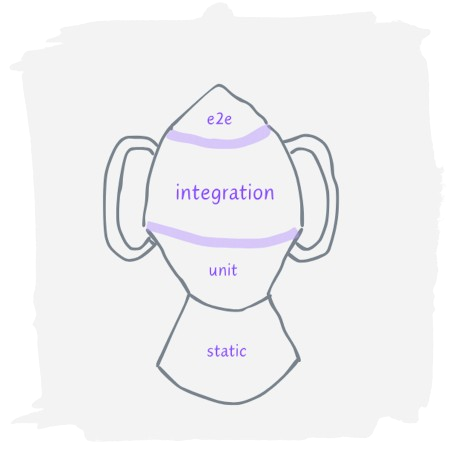

The Ice Cream Cone

The Ice Cream Cone relies heavily on manual testing and, more generally, on testing in an environment that mimics production as closely as possible. Although dev/prod parity is not bad in itself, this approach is the opposite of testing in isolation, which makes it much harder to exercise the details and edge cases we still want to cover. As the application grows, more QA engineers are needed to think through the scenarios and run the tests by hand. Can you see how error-prone and unscalable this is?

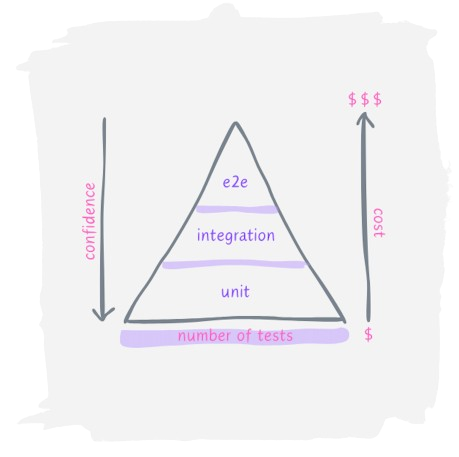

The Pyramid

The not-so-cool Test Pyramid. I still remember the first time I saw the Test Pyramid. My very first thought was: “Holy Moly, it is so cool!” However, as Stephen H. Fishman put it in his article, “Testing is Good. Pyramids are Bad. Ice Cream Cones are the Worst”. So, I've changed my mind a bit. Although the pyramid is better than the previous model, it is unsuitable for many modern applications. At the base of the pyramid are the unit tests, which are considered low-cost: they are cheaper to write and faster to run. Implicit in the pyramid is the idea that confidence grows with cost as you move up, and confidence is an essential aspect of any test suite.

"The pyramid is based on the assumption that broad-stack tests are expensive, slow, and brittle compared to more focused tests, such as unit tests. While this is usually true, there are exceptions. If my high-level tests are fast, reliable, and cheap to modify - then lower-level tests aren't needed." Fowler, martinfowler.com (2016).

Let's unpack Martin Fowler's note. When broad-stack tests are as cheap as unit tests, our test suite can provide a high level of confidence at a lower cost. Moreover, there's no need for multiple tests that validate the same scenario only to watch them all fail for the same reason. Don't be repetitive. To be clear, I am not discarding unit tests. It's all about balance.

Integration Tests = confidence++, mocks--

"Integration tests determine if independently developed units of software work correctly when they are connected to each other." Fowler, martinfowler.com (2018).

Sonar quality gates requiring 80-90% code coverage do not guarantee high-quality software; they only guarantee high coverage. There is a big difference between quality (strongly related to confidence) and coverage.

When tests are written just to satisfy a certain coverage rate, they lose their value. As Kent C. Dodds said in his awesome post about integration tests, “When you strive for 100% all the time, you find yourself spending time testing things that really don't need to be tested”.

Take a look at the following Go code.

```go
func (s service) Get(ctx context.Context, id int64) (model.User, error) {
	return s.repository.find(ctx, id)
}
```

Consider a unit test for this function in which the repository is mocked. Since there are no business rules to validate, the test increases coverage but cannot catch a bug in the real query: it only checks that the service returns whatever the mock returns. Maintaining tests that serve no specific purpose can be annoying and unproductive.

After discussing this with other Togglers, we agreed that integration tests are more effective at preventing developers from making mistakes.

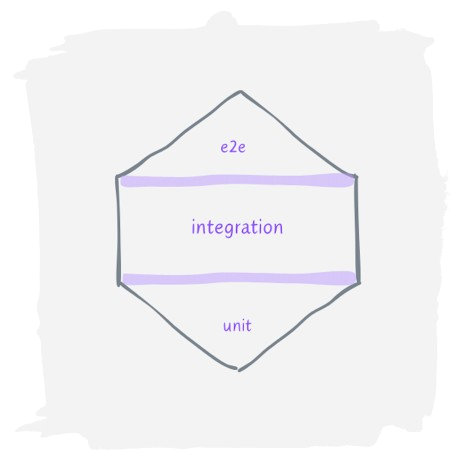

Honeycomb and Trophy

Spotify proposed the honeycomb testing strategy, which emphasizes integration tests while still including unit and end-to-end tests, just in a different proportion. It's all about balance, remember? Kent C. Dodds's testing trophy adds static analysis at its base, but it also gives priority to integration tests.

Testcontainers

Testcontainers is the correct spelling: a single word, with a lowercase c. Testcontainers is not about “testing containers” but about “testing with containers”. The framework's central idea is to run integration tests against real dependencies running in Docker containers, all managed through a straightforward API.

All of this is self-contained and orchestrated within the test context. As simple to run as unit tests, with no mocks! As a developer, I find it delightful to run integration tests from my favorite IDE with no extra steps.

Richard North started this open-source project in 2015, primarily to solve his own pain, at a time when Docker was becoming firmly established across the software community. The first version was released for Java, which is still the most feature-rich implementation. Nowadays, Testcontainers supports many other languages: Go, .NET, Node.js, Python, Rust, Haskell, Ruby, Clojure, and Elixir.

We've achieved great results by using Testcontainers in one of our modules. It allowed us to eliminate the READMEs, scripts, make commands (which had to be run in a particular order), and other extra steps previously needed to run integration tests. This is particularly helpful for new employees who are unfamiliar with the project.

It also allowed us to remove the extra pipeline steps that managed the containers' lifecycle.

We also reduced the module's test execution time in our pipeline by ~35% compared to the old suite. The old suite starts many dependencies, some of which this module does not need, and starting them consumes a lot of time. Below are the numbers from a proof of concept (POC).

Sample tests running in the old suite

| Step | Time to execute |
|---|---|
| Start dependencies | 40s–1min |
| Test execution | 3m 50s |

Sample tests running in the Testcontainers suite

| Step | Time to execute |
|---|---|
| Test execution (including self-contained dependencies) | 3m |
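As a quick sanity check of the ~35% figure, using only the numbers in the two tables (3m 50s = 230 s, 3m = 180 s, and a dependency start-up of 40 s to 60 s in the old suite):

```latex
\begin{aligned}
T_{\text{old}} &\in [40 + 230,\; 60 + 230]\,\text{s} = [270,\; 290]\,\text{s} \\
T_{\text{new}} &= 180\,\text{s} \\
1 - \frac{T_{\text{new}}}{T_{\text{old}}} &\in \left[1 - \tfrac{180}{270},\; 1 - \tfrac{180}{290}\right] \approx [33.3\%,\; 37.9\%]
\end{aligned}
```

The middle of that range is roughly 35%.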

To run the tests with Testcontainers in your pipeline, you only need Docker, which comes preinstalled on GitHub Actions' hosted Ubuntu runners.

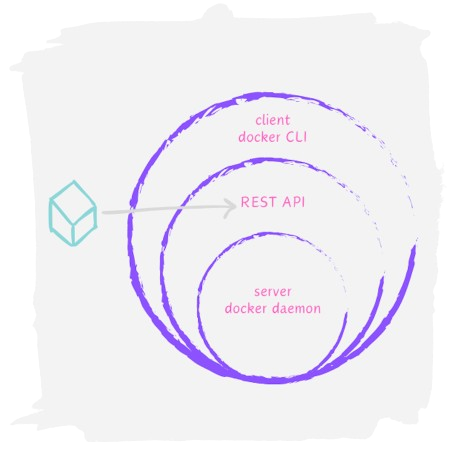

How does Testcontainers integrate with Docker?

The diagram above is a simplified view of the Docker architecture. The Docker CLI manages Docker objects (images, containers, networks, volumes) through the Docker Engine REST API, so every Docker command you run ends up as an API call. Testcontainers calls that API directly, which makes it simple and flexible to drive Docker from code, since an HTTP client is available in almost every language. In Go, Testcontainers does this through Docker's own Go client package. Simple and direct!

Testcontainers and Go

Toggl Track is developed primarily in Go. The Go library offers a number of modules, with Postgres being the most popular. Modules simplify the use of specific technologies by abstracting away most of their configuration. Besides Postgres, there are plenty of other interesting modules, such as MySQL, Redis, Elasticsearch, Kafka, and k3s. k3s is particularly noteworthy: it is a lightweight Kubernetes distribution, which could prove helpful for teams working on operators or similar projects.

If there is no module for your dependency, you can launch any image with the generic container API. Certain containers also require initialization, such as database migrations. One way to run migrations is to retrieve the container's host and mapped port and run them through your migration library. If that is not possible, modules such as Postgres offer a WithInitScripts option that executes SQL scripts when the container starts. And if your tests require multiple dependencies, guess what? You can also use Docker Compose with Testcontainers.

Show me the code!

Here you can find a simple demo application that uses Postgres in its tests. If you have come this far, you know you won't need much to run them.

Let's go over the crucial points of the linked application.

- There is a simple CRUD around the User entity.

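```go
package model

import "github.com/uptrace/bun"

// User is mapped to the users table (aliased u in queries) by bun's struct tags.
type User struct {
	bun.BaseModel `bun:"table:users,alias:u"`

	ID      *int64 `bun:"id,pk" json:"id"`
	Name    string `bun:"name" json:"name"`
	Surname string `bun:"surname" json:"surname"`
	Age     int64  `bun:"age" json:"age"`
}
```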

I chose Bun just to demonstrate how easy it is to set up migrations using your favorite library.

- There is also a simple repository and service. Nothing new so far.

```go
type service struct {
	repository Repository
}

// ...

func (s service) Create(ctx context.Context, user model.User) (*int64, error) {
	return s.repository.save(ctx, user)
}

func (s service) Get(ctx context.Context, id int64) (model.User, error) {
	return s.repository.find(ctx, id)
}

func (s service) List(ctx context.Context) ([]model.User, error) {
	return s.repository.findAll(ctx)
}
```

- The goal is to test the service layer integrated with the repository. And here is the Postgres configuration:

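```go
package integration

import (
	"context"
	"testing"
	"time"

	"github.com/stretchr/testify/require"
	"github.com/testcontainers/testcontainers-go"
	"github.com/testcontainers/testcontainers-go/modules/postgres"
	"github.com/testcontainers/testcontainers-go/wait"
)

// PostgresDatabase wraps the Postgres module's container for use in test suites.
type PostgresDatabase struct {
	instance *postgres.PostgresContainer
}

// NewPostgresDatabase starts a disposable Postgres 12 container.
func NewPostgresDatabase(t *testing.T, ctx context.Context) *PostgresDatabase {
	t.Helper()
	// RunContainer matches the testcontainers-go version used in the demo;
	// newer releases deprecate it in favour of postgres.Run.
	pgContainer, err := postgres.RunContainer(ctx,
		testcontainers.WithImage("postgres:12"),
		postgres.WithDatabase("test"),
		postgres.WithUsername("postgres"),
		postgres.WithPassword("postgres"),
		// Ready once the log line has appeared twice; give up after 5 s.
		testcontainers.WithWaitStrategy(
			wait.ForLog("database system is ready to accept connections").
				WithOccurrence(2).
				WithStartupTimeout(5*time.Second),
		),
	)
	require.NoError(t, err)

	return &PostgresDatabase{instance: pgContainer}
}

// DSN returns a connection string that points at the container's mapped port.
func (db *PostgresDatabase) DSN(t *testing.T, ctx context.Context) string {
	t.Helper()
	dsn, err := db.instance.ConnectionString(ctx, "sslmode=disable")
	require.NoError(t, err)
	return dsn
}

// Close stops and removes the container.
func (db *PostgresDatabase) Close(t *testing.T, ctx context.Context) {
	t.Helper()
	require.NoError(t, db.instance.Terminate(ctx))
}
```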

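The code is plain Go, and its options mirror the parameters you would pass to Docker, which makes it easy to follow. First, we define the image tag, the database name, the user, and the password. The wait strategy tells Testcontainers when the container is ready to receive requests: here, it waits until Postgres has logged its readiness message twice (the official image starts a temporary server for initialization before starting the real one) and gives up after five seconds. You could also run the database migrations by passing SQL files to the WithInitScripts option. Note that we are using the Postgres module.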

The PostgresDatabase wrapper exists only to make the container easier to use from the test suites.

- Finally, here is the test suite.

```go
import (
	"context"
	"database/sql"
	"os"
	"testing"
	"time"

	"github.com/stretchr/testify/require"
	"github.com/stretchr/testify/suite"
	"github.com/uptrace/bun"
	"github.com/uptrace/bun/dialect/pgdialect"
	"github.com/uptrace/bun/driver/pgdriver"
	"github.com/uptrace/bun/migrate"
	// plus the demo's integration package (its import path depends on the module name)
)

// TestSuite is the go test entry point that runs every suite method.
func TestSuite(t *testing.T) {
	suite.Run(t, new(IntegrationTestSuite))
}

type IntegrationTestSuite struct {
	suite.Suite
	db        *bun.DB
	container *integration.PostgresDatabase
}

// SetupSuite runs once before all tests: start Postgres, connect, migrate.
func (s *IntegrationTestSuite) SetupSuite() {
	ctx, cancel := context.WithTimeout(context.Background(), time.Minute)
	defer cancel()
	s.setupDatabase(ctx)
}

// TearDownSuite runs once after all tests: close the pool, then remove the container.
func (s *IntegrationTestSuite) TearDownSuite() {
	ctx, cancel := context.WithTimeout(context.Background(), time.Minute)
	defer cancel()
	if s.db != nil {
		require.NoError(s.T(), s.db.Close())
	}
	if s.container != nil {
		s.container.Close(s.T(), ctx)
	}
}

func (s *IntegrationTestSuite) setupDatabase(ctx context.Context) {
	s.container = integration.NewPostgresDatabase(s.T(), ctx)
	sqldb := sql.OpenDB(pgdriver.NewConnector(pgdriver.WithDSN(s.container.DSN(s.T(), ctx))))
	s.db = bun.NewDB(sqldb, pgdialect.New())
	s.migrate(ctx)
}

// migrate applies the SQL migrations found in the project's migrations directory.
func (s *IntegrationTestSuite) migrate(ctx context.Context) {
	migrations := migrate.NewMigrations()
	err := migrations.Discover(os.DirFS("../../migrations"))
	require.NoError(s.T(), err)

	migrator := migrate.NewMigrator(s.db, migrations)
	// Init creates bun's bookkeeping tables (bun_migrations and bun_migration_locks).
	require.NoError(s.T(), migrator.Init(ctx))

	_, err = migrator.Migrate(ctx)
	require.NoError(s.T(), err)
}
```

At this point, the database is up and the migrations have been applied with Bun. These are the same migrations used in production. This is the main idea: get as close as possible to the production environment.

- The tests are straightforward, like this example. Notice that the database instance comes from the integration test suite.

```go
func (s *IntegrationTestSuite) TestCreate() {
	userService := NewService(NewRepository(s.db))

	s.Run("service should create the user successfully", func() {
		// Given
		user := model.User{
			Name:    "John",
			Surname: "Smith",
			Age:     45,
		}

		// When
		id, err := userService.Create(context.Background(), user)

		// Then
		s.NoError(err)
		s.NotNil(id)
	})
}
```

Alternatives

Dear reader, you may consider exploring other options besides Testcontainers. Let's look at some alternatives.

- If a project's only external dependency is the database, an in-memory database is a tempting way to run the tests. Keep in mind, though, that it is also a mock: the tests never deal with the actual database engine, so they may not reflect its real behavior, which can lead to false positives. A few years back, I worked on a project that used MySQL, with an in-memory database for the tests. I implemented a change, and my new tests passed with the newly added index. However, I was bombarded with errors when the code was deployed to the development environment. It turned out that MySQL didn't support the type of index I had used, although the in-memory database allowed it, which led me to a false positive.

- You can also run the test dependencies with Docker, managed outside the tests. Absolutely, that works. On the other hand, it can create problems of its own. Once upon a time, a developer was running integration tests when they suddenly stopped working. Thinking the problem might be their machine, the developer restarted everything. After two hours of troubleshooting, they discovered that the tests now required a new container, because another team had added a new dependency to the test suite.

Conclusion

Initially, I planned to split this into two sections: Upsides and Downsides. But truth be told, I couldn't round up enough downsides to warrant a separate section. So, if you've hit a snag with Testcontainers, let's chat! I'm all ears (or eyes). Alright, let's highlight the characteristics of the library:

- Tests are simple to write, review, and maintain

- Easy to run locally or in CI/CD pipelines

- Wait strategies

- Random port allocation in containers to prevent conflicts

- Fast feedback

- Productivity

- Flexibility

- Self-contained tests

- Isolation
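Random port allocation is why the code asks the container for its mapped port or connection string instead of hard-coding 5432: Testcontainers has Docker publish each exposed port on a free host port. The stdlib-only sketch below shows the same idea at the socket level, as an analogy rather than what Docker does internally: binding to port 0 lets the operating system pick an unused port, so two listeners (or two parallel test runs) never collide. `listenOnRandomPort` is a hypothetical helper name.

```go
package main

import "net"

// listenOnRandomPort (hypothetical helper) binds to port 0 so the OS picks any
// free port, and returns the listener with the port it actually received.
// The listener stays open, so no other process can take the port meanwhile.
func listenOnRandomPort() (net.Listener, int, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return nil, 0, err
	}
	return ln, ln.Addr().(*net.TCPAddr).Port, nil
}
```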

That is all, folks!